I’ve been wanting to make this for a while, and here it is: the one-stop guide to AI in 2025.

Introduction

Who am I?

I’m Nikhil Anand, currently working as a Research Associate at Adobe Research, Bangalore. I went to IIT Madras, where I picked up a lot of the fundamental concepts of AI and worked on a bunch of projects.

I really like explaining AI concepts and think AI research will keep getting more and more important with time, so I hope to make some sort of a dent by educating people on it. I started explaining stuff on Medium before coming here.

Who is this guide for?

Too many people write about AI on the internet, but they often overlook prerequisites that aren’t trivial.

I believe the future holds a lot for people who know AI and can work with it.

My goal with this guide is to help anyone, from complete beginners to seasoned pros, develop skills to work on modern AI applications and make waves.

We’ll skip the dusty theory overload taught in school and stick to the concepts you truly need today, with clear, intuitive examples that make them click.

How to read this guide

I’ve arranged the topics in the order I would use in a book, starting with easier concepts and slowly moving to more advanced ones.

If you’re a complete beginner, I would advise you to cover the first few blogs first before going on to the more advanced ones.

If you’re slightly more experienced, feel free to check out the blogs however you’d like.

Some of the topics say “coming soon” — I will be writing on those very soon and will edit those links in!

But if you’re interested in any particular topic or want me to include one, let me know in the comments!

Introductory Math and ML

An intro to vectors, matrices, and what they mean in AI

In this blog, I explain everything you need to know about vectors and matrices in a super intuitive way, and why properly understanding them is often the bottleneck to understanding AI in depth.

In this post, I introduce the concept of optimisation, the core idea that drives all of machine learning. You’ll learn how it connects to everyday problems, and why mastering it gives you a deep understanding of how AI learns.

A trip to the depths of gradient descent

In this post, I break down how gradient descent, a core optimisation algorithm, actually works, both exhaustively and intuitively. Starting from simple optimisation problems, we’ll explore gradient descent from the ground up, building a deep, fundamental understanding of how it powers machine learning systems as they learn.
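If you want a quick taste before reading the post, here is a minimal sketch of gradient descent in plain Python. The function f(x) = (x - 3)^2, the learning rate, and the step count are all illustrative assumptions of mine, not something taken from the post.

```python
def gradient_descent(grad, x0, learning_rate=0.1, steps=100):
    """Repeatedly step against the gradient, starting from x0."""
    x = x0
    for _ in range(steps):
        # Move a small distance in the direction that decreases the function.
        x = x - learning_rate * grad(x)
    return x


def grad_f(x):
    # Derivative of the toy function f(x) = (x - 3)^2, chosen for illustration.
    return 2.0 * (x - 3.0)


x_min = gradient_descent(grad_f, x0=0.0)
print(x_min)  # very close to 3, the minimiser of f
```

With this learning rate, each step shrinks the distance to the minimum by a constant factor, which is why a hundred steps are more than enough here. Real models have millions of parameters instead of one, but the update rule has the same shape.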

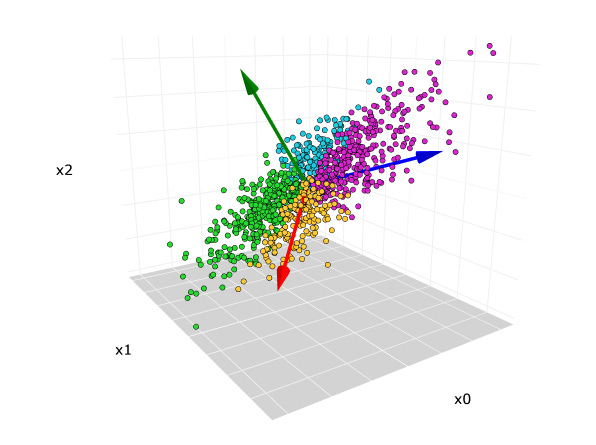

Why PCA is still crucial for compression

Here, I explain the ML concept of PCA and how it’s really just an extension of simple linear algebra. It’s elegantly simple, yet it continues to make AI systems more efficient, saving thousands of dollars.
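To make the compression idea concrete, here is a small sketch of PCA on 2D points in plain Python. It finds the first principal component with power iteration (one of several ways to do it), stores each point as a single number, and reconstructs it. The data points and the helper names are hypothetical and picked so that the points lie exactly on a line.

```python
import math


def first_principal_component(points, iterations=50):
    """Return (mean, unit direction) of the top principal component in 2D."""
    n = len(points)
    mean = (sum(p[0] for p in points) / n, sum(p[1] for p in points) / n)
    centered = [(p[0] - mean[0], p[1] - mean[1]) for p in points]
    # Entries of the 2x2 covariance matrix.
    cxx = sum(x * x for x, _ in centered) / n
    cxy = sum(x * y for x, y in centered) / n
    cyy = sum(y * y for _, y in centered) / n
    # Power iteration: assumes the start vector is not orthogonal to the answer.
    v = (1.0, 0.0)
    for _ in range(iterations):
        w = (cxx * v[0] + cxy * v[1], cxy * v[0] + cyy * v[1])
        norm = math.hypot(w[0], w[1])
        v = (w[0] / norm, w[1] / norm)
    return mean, v


points = [(1.0, 2.0), (2.0, 4.0), (3.0, 6.0)]  # toy data on the line y = 2x
mean, direction = first_principal_component(points)

# Compress: one number per point instead of two.
codes = [(p[0] - mean[0]) * direction[0] + (p[1] - mean[1]) * direction[1]
         for p in points]

# Decompress: walk back along the principal direction from the mean.
restored = [(mean[0] + c * direction[0], mean[1] + c * direction[1])
            for c in codes]
```

Because the toy points sit exactly on a line, nothing is lost in the round trip. With real data the reconstruction is approximate, and the trade-off between size and accuracy is what makes PCA useful for compression.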

What is linear regression and why is it still important?

This is an introduction to the fundamentals of linear regression. We explore the concepts mathematically but in a super intuitive and visual way, to make things exhaustive yet easy to grasp.

Regularisation in linear regression and how the concept is still used in modern neural nets (coming soon)

Deep Learning Concepts

The neuron: the simplest unit of what today’s LLMs are made of (coming soon)

How are neural networks trained? (specifically MLPs) (coming soon)

The story of vision models and where it all started (coming soon)

RNNs: The king of language models before the transformer (coming soon)

Autoencoders and their huge relevance in research today (coming soon)

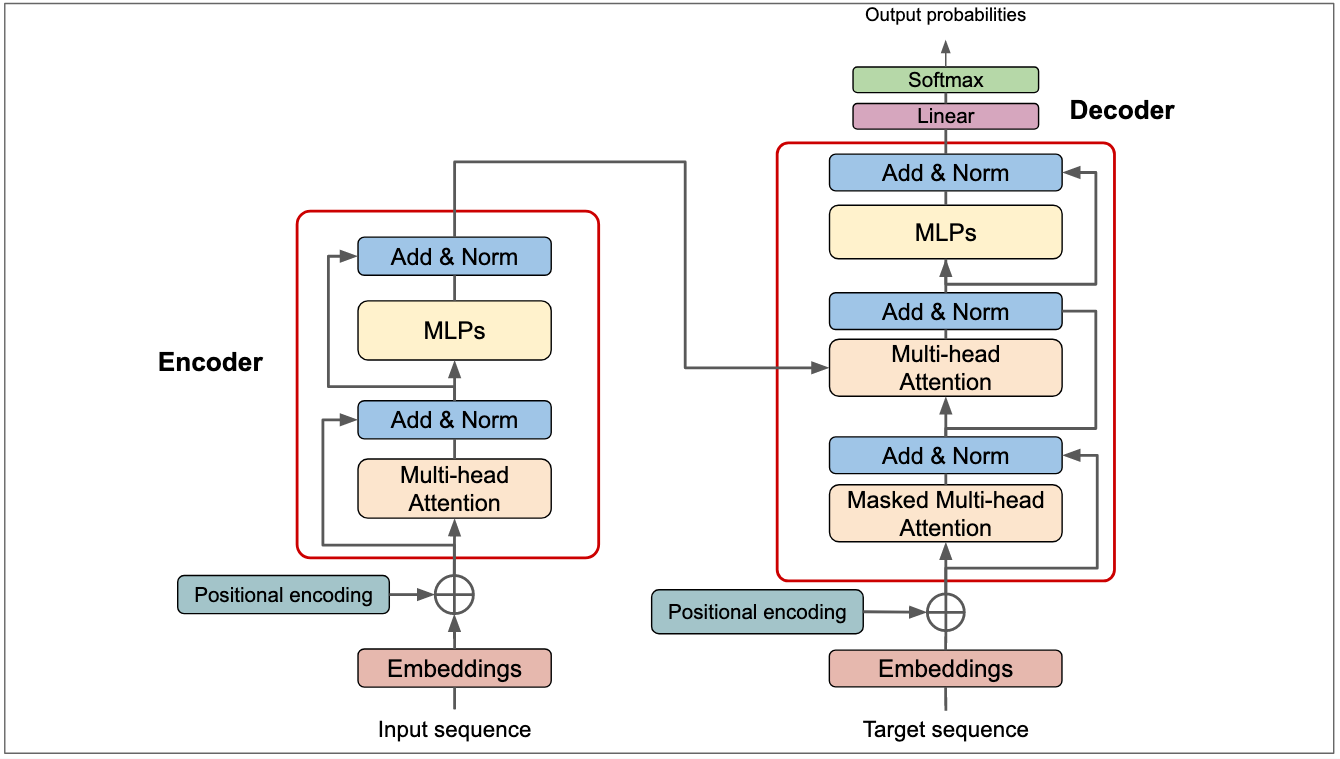

The ultimate guide to transformer architecture (coming soon)

Self-attention, where it started, how it works (coming soon)

How are LLMs trained? (coming soon)

Advanced Concepts

Interpretability

Interpretability is all about figuring out what’s going on inside LLMs and why they behave the way they do. I’ll start with some introductory blogs and move on to more niche ones.

Mechanistic interpretability is set to be the most important skill in tech.

Read this blog for an introduction to the field of mechanistic interpretability, why it’s set to become the most important field in tech very soon, and why AI safety is becoming increasingly important for the world.

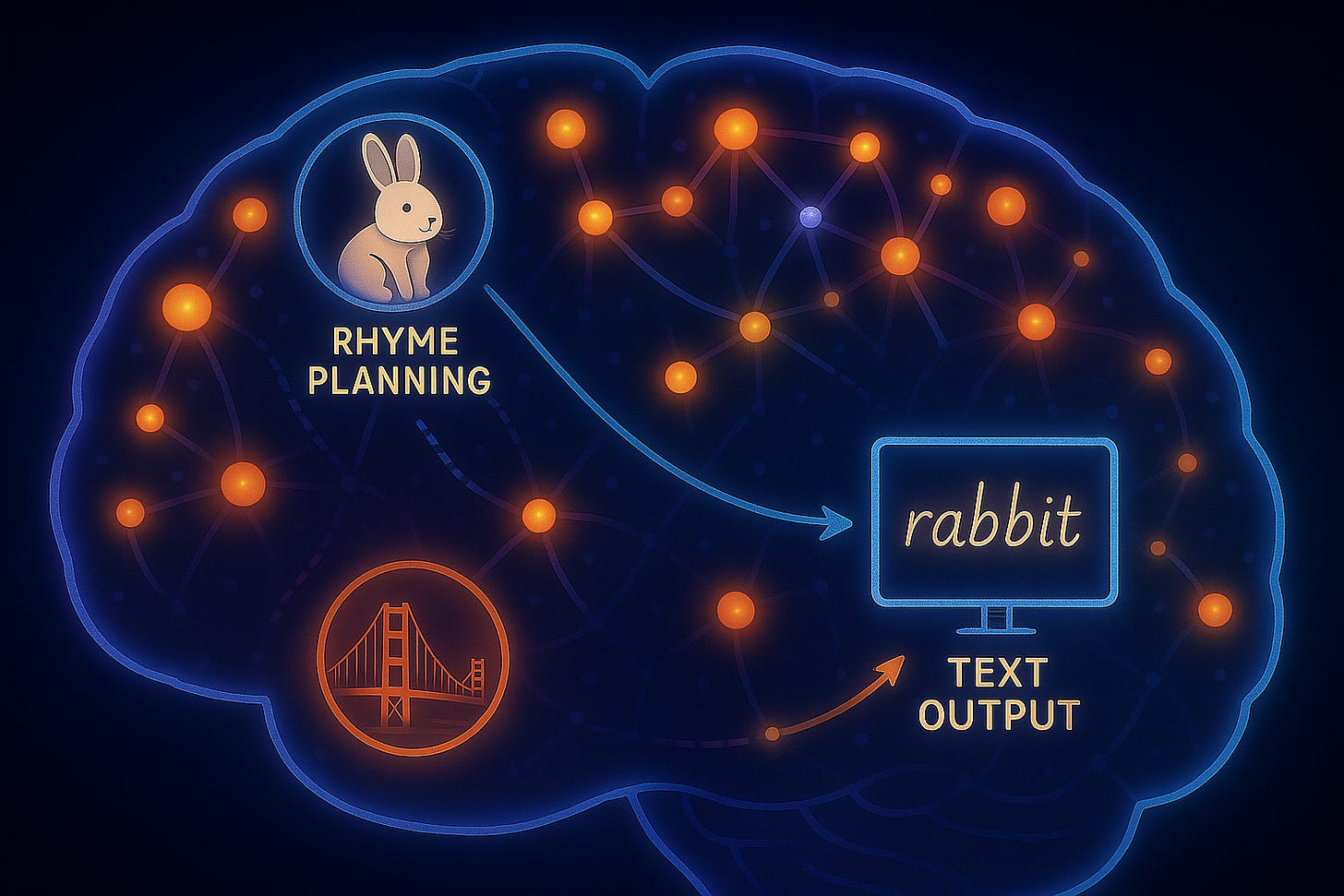

Where do LLMs keep their memories?

This one covers a technique called Causal Mediation Analysis and how it can be used to figure out where LLMs keep their memories.

This group edits facts in an LLM’s memory

Here, we talk about how the same research group that figured out where memories are stored in LLMs was able to edit those facts. For instance, they could find the fact “The Eiffel Tower is in Paris” and replace Paris with New York.

How RAG LLMs “copy-paste” information using attention heads called “retrieval heads”

Here, I talk about specific attention heads in the transformer architecture that copy-paste information from the context through an intriguing but intuitive mechanism.

How attention shapes understanding in RAG LLMs

Here, we talk about the concept of attention, and how it is crucial for LLMs to abide by the context in contextual question-answering.

Your LLM knows when it’s lying to you.

Here, we cover an exciting study showing that an LLM’s internals hold clues that it is lying to you, even when its words claim it is telling the truth.

Activation steering

This is also technically a subset of interpretability, but I’ve got a couple of blogs on this topic, so I’ve made this its own section.

Understanding “steering” in LLMs.

I cover the basics of steering and how it’s similar to a famous concept in NLP.

A comprehensive guide to how I made my LLM’s outputs 200% better.

Here, I cover several methods including contrastive decoding, activation steering and clever prompting to improve my LLM’s performance.

Circuit Tracing (Anthropic)

This section consists of several blogs I’ve written about the circuit tracing technique, each going deeper and deeper into the technique.

What’s going on inside Claude’s mind?

In this blog, I explain the replacement model, the basics of what transcoders do, and how Anthropic mapped specific transcoder features to human-interpretable concepts in a broad but shallow overview of the field.

A simple ML concept that helped Anthropic map Claude’s brain

Here, we discuss a simple ML concept, sparsity, and how that concept was super crucial in a recent application involving mapping Claude’s brain.

I broke down Anthropic’s thought-tracing trick to its core

Here, I go much deeper into what transcoders are, and explain exactly why they’re necessary to both map features to concepts AND figure out relationships between the features that allow us to trace the path of thoughts in the LLM.

RL-finetuning (DeepSeek)

Finetuning with RL has become popular for reasoning models since the release of DeepSeek.

Why DeepSeek might’ve just revealed the path to AGI

We cover the precise details of DeepSeek’s RL strategy, how it’s different from other methods, and why it could potentially mimic human learning and possibly take us to AGI.

LLMs sucked at understanding images before this happened.

In this blog, we talk about LLaVA, a vision-language model, and the finetuning process that made it such a game changer for vision-language models.

Can we make an LLM that both “sees” and “reasons”?

In this blog, we devise an RL training methodology that helps vision-language models get better at reasoning, similar to how DeepSeek was trained with RL to improve a particular quality of its outputs.

How to make your own software engineer (like Devin)

In this blog, I cover the steps for running RL training to create an AI software engineer like Devin.

Decoding methods

What is logit lens and why is it useful?

Here, I cover a concept called logit lens and how it can be used in interpretability. I also show you how to code it up.
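As a rough preview of the idea, here is a toy sketch in plain Python. Logit lens takes the hidden state at an intermediate layer, multiplies it by the model's unembedding matrix, and reads off which token the model would predict at that point. Everything below (the three-word vocabulary, the matrix, and the per-layer hidden states) is made up for illustration. A real implementation would pull these from an actual model and usually also applies the final layer norm first, which I skip here.

```python
# Hypothetical 3-token vocabulary and a 2-dimensional hidden space.
vocab = ["Paris", "London", "Rome"]

# Toy unembedding matrix: one row of weights per vocabulary token.
unembed = [
    [1.0, 0.0],    # Paris
    [0.0, 1.0],    # London
    [-1.0, -1.0],  # Rome
]

# Made-up hidden states for the same token position at layers 0, 1 and 2.
hidden_by_layer = [
    [0.1, 0.3],
    [0.4, 0.5],
    [0.9, 0.2],
]


def logit_lens(hidden, unembed, vocab):
    """Project a hidden state onto the vocabulary and return the top token."""
    logits = [sum(h * w for h, w in zip(hidden, row)) for row in unembed]
    best = max(range(len(vocab)), key=lambda i: logits[i])
    return vocab[best], logits


predictions = [logit_lens(h, unembed, vocab)[0] for h in hidden_by_layer]
print(predictions)  # ['London', 'London', 'Paris']
```

In this toy setup the prediction only flips to the final answer at the last layer. Watching where that flip happens in a real model is what makes the technique useful for interpretability.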

Making LLMs more truthful with DoLA (Part I)

In this blog, I explain how a useful decoding method called DoLA can be used to make LLMs more truthful.

5 insights that helped a research group 2X its LLM’s performance

Here, we cover 5 unique LLM concepts, including contrastive decoding, that, put together, helped a research group improve its LLM’s performance by 2X.

Agents

Make Excel 100x easier with AI

Here, I cover a 3-agent AI workflow that can make using Excel a lot easier.

LLM Optimization

KV Cache: The Hidden Optimization Every LLM Uses

This blog is all about what KV Cache is, and how this simple yet powerful optimization drastically speeds up inference while cutting compute costs, making it one of the key tricks in modern LLM optimization.
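Here is a minimal sketch of the idea in plain Python, using a single attention head. The `project` function is a hypothetical stand-in for the learned query, key and value projections, and the token vectors are toy values. The point is only to show that caching keys and values gives the same outputs as recomputing them for the whole prefix at every step.

```python
import math


def attend(query, keys, values):
    """Scaled dot-product attention for one query over all keys and values."""
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    top = max(scores)  # subtract the max for a numerically stable softmax
    weights = [math.exp(s - top) for s in scores]
    total = sum(weights)
    dim = len(values[0])
    return [sum(w * v[i] for w, v in zip(weights, values)) / total
            for i in range(dim)]


def project(token):
    # Hypothetical toy projections standing in for learned W_q, W_k, W_v.
    q = [x for x in token]
    k = [2.0 * x for x in token]
    v = [x + 1.0 for x in token]
    return q, k, v


class KVCache:
    """Stores keys and values of past tokens so they are computed only once."""

    def __init__(self):
        self.keys = []
        self.values = []

    def step(self, query, key, value):
        self.keys.append(key)
        self.values.append(value)
        return attend(query, self.keys, self.values)


tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]

# With a cache: each token is projected exactly once.
cache = KVCache()
cached_outputs = []
for token in tokens:
    q, k, v = project(token)
    cached_outputs.append(cache.step(q, k, v))

# Without a cache: every step re-projects the entire prefix.
uncached_outputs = []
for t in range(len(tokens)):
    projected = [project(tok) for tok in tokens[: t + 1]]
    q = projected[-1][0]
    keys = [p[1] for p in projected]
    values = [p[2] for p in projected]
    uncached_outputs.append(attend(q, keys, values))
```

Both loops produce the same outputs, but the uncached one redoes the key and value projections for every earlier token at every step. That repeated work grows with sequence length, which is the cost the cache removes.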

Conclusion

The guide is still at a nascent stage and I haven’t covered a lot of the basics yet. I will be filling in the gaps very soon, and the guide will continue to evolve over time. Let me know in the comments if you want me to cover a particular topic first.

Drop a comment to share how you’d use this guide!

The advanced concepts are all filled in, but for a person like me who wants to start from scratch, there is not much content yet. It would be very helpful to add more fundamental concepts, and I highly recommend doing so.